The data center has become the modern-day warehouse of the most important asset in the digital age, which is data.

Over the years, data centers have changed from an expensive server in a closet, to an offsite cluster of servers, to a worldwide web of integrated centers called "the cloud".

Here is some of the most important data center knowledge you need to have in 2018 and beyond.

Changes in Data Center Sizes and Software Being Used

These centers have grown by leaps and bounds not only in their size but also in their sophistication. There are so many ways in which current data centers are far superior to those of just a decade ago.

For example:

They run on more efficient software, use virtualization engines for redundancy, and offer high connectivity, advanced security features, improved cooling methods and much more.

However, the next decade will see even more advancements in data centers. Is your firm prepared for those advancements? Whatever you do, make sure you locate nearby, cutting-edge centers at datacenters.com.

There are a few key advancements every executive should be aware of.

MicroData Centers

The days of massive data centers in the middle of the desert might be about over. Today, many companies depend on speed to make their business processes work.

Why does this matter?

That means they need to access data servers that are located in the heart of central business districts. That is especially true for businesses that deal in low-latency transactions, such as banking, trading, and some energy and IT companies.

Rather than build massive data centers in these locations that would be prohibitively expensive, firms are now building micro centers that store the most readily accessible data.
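To see why distance matters for low-latency work, a rough back-of-the-envelope calculation helps. The sketch below assumes signals travel through optical fiber at roughly 200,000 km per second (about two thirds of the speed of light in a vacuum) and ignores routing and processing delays. The distances are illustrative and not taken from any real deployment.

```python
# Rough best-case round-trip propagation delay over optical fiber.
# Assumption: signal speed in fiber is about 200,000 km/s.
FIBER_SPEED_KM_PER_S = 200_000


def round_trip_ms(distance_km: float) -> float:
    """Return the best-case round-trip time in milliseconds."""
    return 2 * distance_km / FIBER_SPEED_KM_PER_S * 1000


# Hypothetical distances: a micro center downtown vs. a remote site.
for label, km in [("micro center, 5 km away", 5),
                  ("remote center, 1,500 km away", 1500)]:
    print(f"{label}: {round_trip_ms(km):.2f} ms")
```

Under these assumptions the nearby micro center adds a small fraction of a millisecond, while the remote site adds several milliseconds before any server work even begins.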

Interestingly, automotive companies are now demanding low latency, microdata centers as well. That is because self-driving and internet connected cars need constant access to data.

Here is the bottom line:

When data is disconnected even for a couple of seconds, it could result in a car going out of control.

Of course, more and more industries will face this same issue in the future. That will lead to more microdata centers that can provide real-time access 24/7.

Next up, we discuss hybrid computing and why your firm should consider having a hybrid strategy.

Hybrid Computing

Companies will connect to a hybrid network of data centers in the future, representing the different needs they have at different times.

Connected medical devices and manufacturing robots have very different data needs than a stock market trade or an e-commerce purchase.

The split between hyperscale (centralized) and edge (decentralized) computing will become more important.

Now:

Firms will make decisions about which data they want at hyperscale speeds and which they can accept at decentralized speeds. Hyperscale ones will come with a price premium while decentralized data prices will tend towards zero.

Those data nodes can be held in pieces on one of hundreds of thousands or millions of devices around the world. Or they can be at large, secure, professional data centers located far from business districts.

They guarantee uptime and reliability but offer relatively low connectivity speeds.

The best part?

Some devices actually benefit from these decentralized server centers. For example, drones operate at the "edges" of cities, towns and even rural locations. They need to access a much more decentralized network of data.

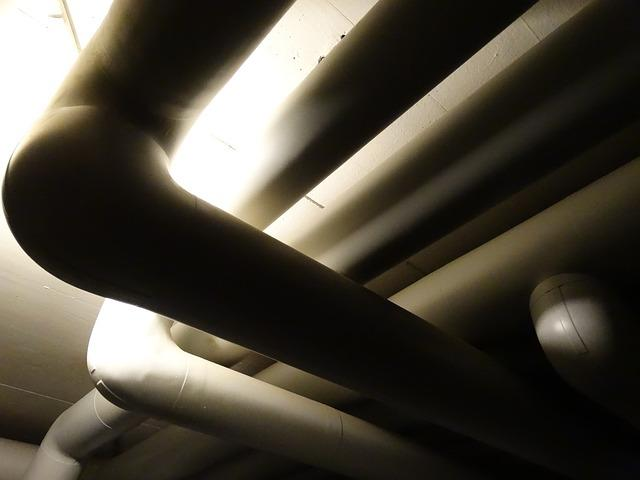

To control the heating in data centers, liquid cooling is being used. We discuss this in the next section.

Liquid Cooling

One of the biggest factors holding data centers back from being even more compact and efficient is cooling. Data centers that overheat tend to slow down the clock speed of their machines, which results in slower connections for everyone.

Engineers are constantly working on ways to improve cooling at data centers.

What is liquid cooling?

Liquid cooling is a new technology that micro-targets chips to make sure they are cooled, rather than cooling the entire open space of the center. That allows much better energy efficiency for the same number of chips.

Server-level sealed-loop technology also contains the cool air and carefully releases heat from the loop.

That makes the server act more efficiently. Ultimately this helps to increase sustained throughput, improve the density of server clusters and diminish energy consumption.

And speaking of efficiency, data centers are now experimenting with DNA storage to store more data in less space. For more on this, read the next section.

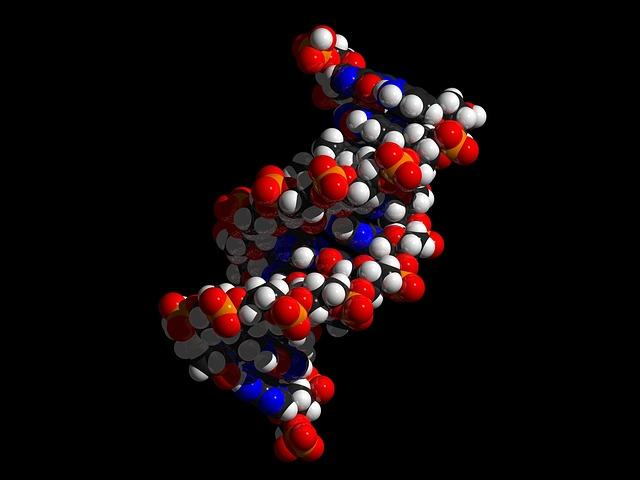

DNA Storage

Data needs are growing so quickly that the current infrastructure cannot keep up with the pace of change. The current set of data centers worldwide would only be able to hold 3% of the total data necessary in 2030.

For that reason, researchers are looking for radically new ways to organize data centers that don't require as much new infrastructure. DNA storage may be one way of achieving this goal.

Check this out:

DNA storage is based on the amazing properties of our own DNA. A tiny speck of DNA can hold the equivalent of 10 terabytes of data using biological principles to ignore most of the data that is not being used at one time.

If digital data could be reformatted to fit into the nucleic acid sequence of DNA, the space problem would be solved and virtually infinitely more data could be stored in the existing space that is being used today.
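One simple way to picture that reformatting is to map every two bits of digital data onto one of the four DNA bases. The mapping used below (00 to A, 01 to C, 10 to G, 11 to T) is an illustrative assumption, not a standard. Real DNA storage schemes are more involved, adding error correction and avoiding sequences that are hard to synthesize or read.

```python
# Illustrative 2-bits-per-base mapping (an assumption, not a standard):
# 00 -> A, 01 -> C, 10 -> G, 11 -> T
BASES = "ACGT"


def encode(data: bytes) -> str:
    """Turn bytes into a string of DNA bases, four bases per byte."""
    out = []
    for byte in data:
        # Walk the byte two bits at a time, most significant pair first.
        for shift in (6, 4, 2, 0):
            out.append(BASES[(byte >> shift) & 0b11])
    return "".join(out)


def decode(strand: str) -> bytes:
    """Reverse encode(): every four bases become one byte."""
    out = bytearray()
    for i in range(0, len(strand), 4):
        byte = 0
        for base in strand[i:i + 4]:
            byte = (byte << 2) | BASES.index(base)
        out.append(byte)
    return bytes(out)


strand = encode(b"Hi")
print(strand)          # CAGACGGC
print(decode(strand))  # b'Hi'
```

Each base carries two bits in this toy scheme, so one byte becomes four bases and the round trip recovers the original data exactly.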

While some elements of DNA storage have already been validated in labs, it is not yet ready for prime time commercial use.

Machine Learning and Artificial Intelligence

Data centers are now being equipped with smart software tools to better administer operations and proactively solve problems before they occur. This is one of the most important pieces of data center knowledge to keep in mind.

This software, known as machine learning or artificial intelligence, learns from the activity in the center over time and makes changes to maximize performance.

Here is an example:

A machine learning data center will learn to anticipate which servers need to be cooled ahead of time so that they function at peak speeds when demand is highest.

That improves the speed, efficiency and energy use of the entire data center over time. It also occurs without any human intervention besides installing the software in the first place.
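As a minimal sketch of the idea, the snippet below forecasts each server's load from the average of its past readings for the same hour and flags it for pre-cooling when the forecast crosses a threshold. The load figures, the 0.8 threshold, and the function and rack names are all hypothetical, and a production system would use a trained model rather than a simple average.

```python
from statistics import mean


def forecast_load(history: list[float]) -> float:
    """Predict load as the average of past readings for the same hour."""
    return mean(history)


def servers_to_precool(loads_by_server: dict[str, list[float]],
                       threshold: float = 0.8) -> list[str]:
    """Return the servers whose forecast load meets or exceeds the threshold."""
    return sorted(
        name
        for name, history in loads_by_server.items()
        if forecast_load(history) >= threshold
    )


# Hypothetical utilization (0.0 to 1.0) at the same hour on three past days.
past = {
    "rack-a": [0.90, 0.85, 0.95],
    "rack-b": [0.30, 0.40, 0.35],
}
print(servers_to_precool(past))  # ['rack-a']
```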

Similarly, machine learning tools get much better at defending the center through proactive measures to avert DDoS attacks, malware, and other issues.

They respond much quicker than any human and help keep client data secure at all times.

Summary

Over the next decade, data centers will change rapidly. The increasing demand for data storage is simply unending.

Companies, individuals, governments and NGOs will all continue to demand access to safe, reliable, fast data at all times. New data center technologies and infrastructure will hopefully meet this demand.